Consider this paradox: 91% of mid-market companies have adopted generative AI, yet 92% encountered significant challenges during implementation, and 63% admit they are inadequately prepared [1]. This disconnect represents billions in unrealized value and competitive advantage left on the table. For mid-market CEOs, CISOs, and technology leaders, the question is no longer whether to adopt AI, but how to do so in a way that delivers both immediate returns and transformative growth.

The answer lies in a dual-track approach that balances calculated quick wins with ambitious long-term initiatives, all anchored in practical oversight and accountability structures.

The Mid-Market AI Readiness Gap

The 2025 RSM Middle Market AI Survey reveals troubling realities beneath widespread adoption. While a quarter of organizations report that AI is fully integrated into core operations, the majority are proceeding without adequate preparation. The most common obstacles are foundational: data quality issues plague 41% of implementations, privacy and security concerns affect 39%, and insufficient internal expertise hampers 35% [1].

Perhaps most revealing: 70% of mid-market firms using AI recognize they need external support to maximize its potential [1]. The cost of poor implementation extends beyond wasted resources to reputational damage, regulatory penalties, and the entrenchment of biased systems that become harder to fix over time.

Strategic Framework: Quick Wins vs. Moonshots

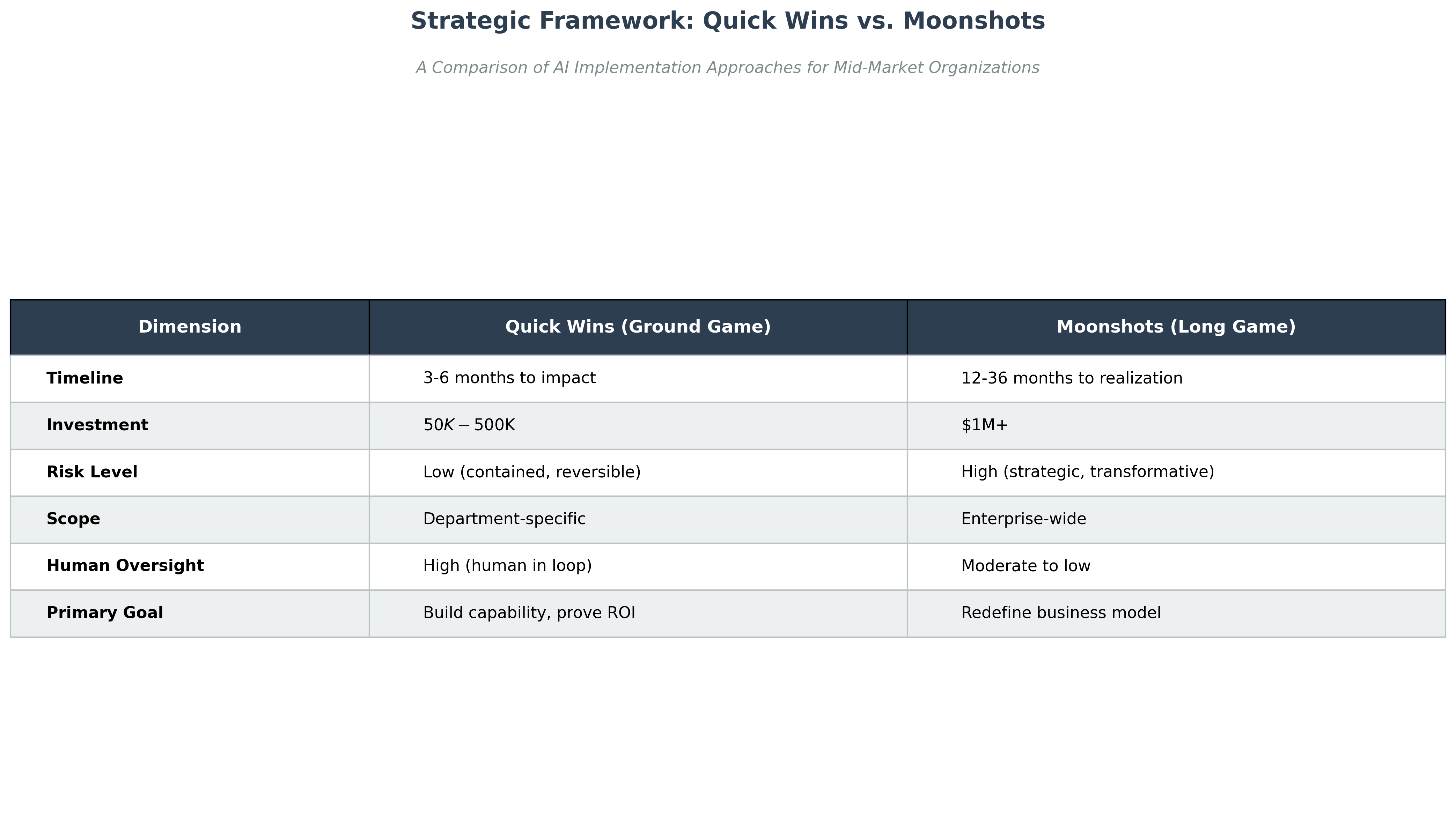

Quick wins and moonshots are not merely different scales of the same activity but fundamentally different strategic approaches with distinct risk profiles and organizational implications.

The Ground Game: Strategic Quick Wins

Quick wins generate immediate ROI while building organizational muscle memory for ambitious initiatives. MIT Sloan researchers document three high-value, low-risk categories [2]:

Tasks Common Across Roles represent the lowest-hanging fruit. AI-powered meeting summarization, document synthesis, and routine communication drafting can deliver 20-30% productivity gains in knowledge work with minimal customization.

Specialized Applications offer higher returns with moderate risk. CarMax uses generative AI to synthesize thousands of customer reviews into concise summaries displayed on vehicle research pages, improving both customer experience and internal insights without significant risk [2]. In financial services, firms deploy AI to generate compliance reports and review contracts, improving efficiency and accuracy while maintaining human oversight at critical decision points.

Internal Innovation Platforms represent the most sophisticated quick-win approach. Colgate-Palmolive created an internal AI Hub requiring training on responsible AI use before access. The company uses retrieval-augmented generation to query vast datasets of consumer research and market data. Most innovatively, Colgate-Palmolive tests new product concepts on digital consumer twins, dramatically accelerating R&D while reducing costs [2].

These quick wins generate data, expertise, and organizational confidence necessary for moonshots. They also surface foundational gaps in data quality, oversight structures, and skills that must be addressed.

The Long Game: Transformative Moonshots

Moonshots represent fundamental reimagining of how organizations create value and make decisions. These initiatives carry substantial risk but offer transformative competitive advantage.

Liberty Mutual deploys an "intelligent choice architecture" that assists claims adjusters in triaging calls, identifying patterns across thousands of claims, and presenting decision options with explicit trade-offs. This augments human judgment with insights no individual could generate manually, continuously learning and improving recommendations [2].

Sanofi uses AI to tackle cognitive bias in corporate decision-making. The company's systems guide managers in optimizing investments by surfacing data that challenges assumptions and helps overcome the sunk-cost fallacy, leading to more strategic resource allocation and willingness to abandon underperforming projects [2].

These moonshots require substantial upfront investment in data infrastructure, model training, and change management. Because they operate with reduced human oversight, robust controls and accountability are essential. In exchange, they generate compounding returns as systems learn, creating competitive advantages that are difficult for rivals to replicate.

Implementing Practical Oversight and Accountability

Organizations with effective AI management frameworks are 75% more likely to report improved outcomes [4]. The business case rests on three pillars identified by EY [3]:

Realization demands organizations recognize their responsibility as technology stewards while maintaining rigorous focus on value creation. This means establishing clear AI performance metrics, conducting regular system audits, and maintaining discipline to shut down failing initiatives. A U.S. Government Accountability Office study found agencies implementing AI risk management practices experienced fewer incidents and reduced costs [3].

Reputation has become a strategic asset. AI systems exhibiting bias, lacking explainability, or producing harmful outcomes inflict lasting brand damage. Companies must implement fairness metrics, train systems on diverse datasets, and conduct regular bias audits. This is critical for customer-facing applications where AI errors become public relations crises.
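One way a bias audit might quantify fairness is to compare how often each group receives a favorable outcome. The sketch below computes a demographic parity difference, which is one common fairness metric among several and not one prescribed by the sources cited here. The function names, the sample decisions, and the group labels are all hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: 1 = favorable outcome, 0 = unfavorable outcome.
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_difference(decisions, groups)
print(f"Selection rates: {selection_rates(decisions, groups)}")
print(f"Demographic parity difference: {gap:.2f}")
```

A gap near zero suggests similar treatment across groups on this one measure. What threshold should trigger a review is a policy decision for the oversight team, not something the metric settles.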

Regulation has evolved from future concern to present reality. For the most serious violations, the EU AI Act imposes fines of up to €35 million or 7% of global annual turnover, whichever is higher [3]. The U.S. Federal Trade Commission issues increasingly specific guidance on AI use, emphasizing transparency and fairness [3]. Proactive management and clear guardrails are the only viable strategy.

Translating principles into practice requires assembling cross-functional teams including legal, risk, technology, and business representatives. Develop clear policies defining acceptable use cases, data standards, and approval processes. Establish tiered oversight where low-risk applications receive streamlined approval while high-risk moonshots undergo rigorous review. Invest in data quality, model monitoring infrastructure, and employee training as prerequisites for responsible scaling.
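The tiered oversight idea can be sketched as a simple routing rule. This is an illustrative assumption only: the three risk flags, the tier names, and the thresholds are hypothetical, and a real policy would be defined by the cross-functional team described above.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    STREAMLINED = "streamlined approval"
    STANDARD = "standard review"
    RIGOROUS = "rigorous review"


@dataclass
class UseCase:
    name: str
    customer_facing: bool       # output reaches customers directly
    uses_personal_data: bool    # processes personal or sensitive data
    autonomous_decisions: bool  # acts without a human approving each decision


def assign_tier(use_case: UseCase) -> Tier:
    """Route a proposed AI use case to an oversight tier by counting risk flags."""
    risk_flags = sum([
        use_case.customer_facing,
        use_case.uses_personal_data,
        use_case.autonomous_decisions,
    ])
    if risk_flags == 0:
        return Tier.STREAMLINED
    if risk_flags == 1:
        return Tier.STANDARD
    return Tier.RIGOROUS


# Hypothetical examples: a low-risk quick win and a higher-risk moonshot.
summarizer = UseCase("meeting summarization", False, False, False)
claims_triage = UseCase("claims triage assistant", True, True, False)

for case in (summarizer, claims_triage):
    print(f"{case.name}: {assign_tier(case).value}")
```

Counting flags keeps the rule easy to explain to non-technical reviewers. A weighted score, or a hard rule that any autonomous system goes straight to rigorous review, would be an equally reasonable design.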

Common Pitfalls to Avoid

The challenges reported by 92% of adopters follow recognizable patterns [1]. Data quality issues sink more projects than any other factor, so organizations must ensure data is clean, representative, and properly managed before model development begins. Lack of clear ownership creates ambiguity about who is responsible for AI outcomes, so every initiative needs an executive sponsor and an accountable cross-functional team. Insufficient change management dooms even technically successful implementations.

Most critically, organizations fail when pursuing moonshots without first securing quick wins. The capabilities, learning, and political capital from early successes are essential prerequisites. Attempting to leapfrog this progression dramatically increases failure risk.

From Strategy to Execution

Begin by assessing AI readiness across data infrastructure, oversight maturity, and internal expertise. Identify 2-3 quick-win opportunities aligned with strategic priorities that demonstrate measurable value within six months. Use these to build capability and surface gaps.
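A readiness assessment along these three dimensions could be recorded as a simple scorecard that highlights where to invest first. The sketch below is a hypothetical illustration: the 1 to 5 scale, the threshold, and the sample scores are assumptions, not figures from the cited surveys.

```python
def weakest_dimensions(scores, threshold=3):
    """Return dimensions scoring below the threshold, weakest first."""
    gaps = [dimension for dimension, score in scores.items() if score < threshold]
    return sorted(gaps, key=lambda dimension: scores[dimension])


# Hypothetical self-assessment on a 1 (weak) to 5 (strong) scale.
readiness = {
    "data infrastructure": 2,
    "oversight maturity": 4,
    "internal expertise": 1,
}

for dimension in weakest_dimensions(readiness):
    print(f"Gap to address: {dimension} (score {readiness[dimension]})")
```

Quick wins chosen to exercise the weakest dimensions tend to surface gaps early, which fits the goal of using them to build capability.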

Simultaneously, establish the oversight foundations: form cross-functional teams, develop clear policies and guardrails, and invest in data quality and monitoring infrastructure. As quick wins generate momentum, plan moonshots grounded in capabilities and lessons from earlier successes.

The competitive stakes are substantial. AI is reshaping industries now. Organizations successfully navigating the dual-track approach will emerge stronger and more competitive. Those that delay will find themselves increasingly disadvantaged as AI-native competitors redefine customer expectations and operational standards.

The journey begins with a single, well-chosen step. The question is whether your organization will embrace AI strategically, responsibly, and with the clarity necessary to unlock its transformative potential.

References

[1] RSM US. (2025). 2025 RSM Middle Market AI Survey. https://rsmus.com/newsroom/2025/middle-market-firms-rapidly-embracing-generative-ai-but-expertise-gaps-pose-risks-rsm-2025-ai-survey.html

[2] MIT Sloan Management Review. (2025). Practical AI implementation: Success stories from MIT Sloan Management Review. https://mitsloan.mit.edu/ideas-made-to-matter/practical-ai-implementation-success-stories-mit-sloan-management-review

[3] EY. (2025). The business case for responsible AI. https://www.ey.com/en_us/insights/ai/the-business-case-for-responsible-ai

[4] Gartner. (2023). AI Governance Frameworks For Responsible AI. (As cited in EY's "The business case for responsible AI")