In the race to adopt artificial intelligence, it's easy to view compliance as just another box to check. But for today's business leaders, that's a dangerously outdated perspective. AI compliance is no longer a simple, one-size-fits-all checklist; it's a strategic imperative that demands a global, forward-looking approach. As regulations evolve at breakneck speed, a reactive stance can cost you time, money, and customers.

Many organizations start with a solid foundation, following established frameworks like the NIST AI Risk Management Framework. They set up governance boards, audit their models, and work to build a culture of ethics. These steps are essential, but they are no longer enough. The ground is shifting. From the EU's sweeping AI Act to new management standards like ISO/IEC 42001, the definition of "good" AI governance is being rewritten on a global scale.

This post will guide you beyond the foundational steps. We'll explore the critical additions you need to make to your AI compliance strategy, ensuring your organization can not only mitigate risk but also build trust, drive innovation, and maintain a competitive edge in the age of AI.

The Foundation: A 10-Step Plan for Structured Compliance

First, let's acknowledge the fundamentals. A well-structured AI compliance program is built on a proven set of principles. Our research confirms that a ten-step framework, validated against authoritative sources like the NIST AI RMF and the Institute of Internal Auditors (IIA) AI Auditing Framework, provides a robust starting point. These steps cover the essentials:

- Understanding the Regulatory Landscape

- Establishing Governance Structures

- Auditing and Documenting AI Systems

- Implementing Risk Management

- Strengthening Data Governance

- Adopting Explainability

- Developing Incident Response

- Conducting Independent Audits

- Building a Compliance Culture

- Staying Ahead of Emerging Standards

If your organization is addressing these ten areas, you are on the right track. But in the current environment, this is the starting line, not the finish line.

Beyond the Basics: Three Essential Upgrades for Your AI Strategy

To truly future-proof your organization, you need to look beyond the foundational checklist. Here are three critical areas that demand your attention now.

1. The EU AI Act: The Global Rulebook is Already Here

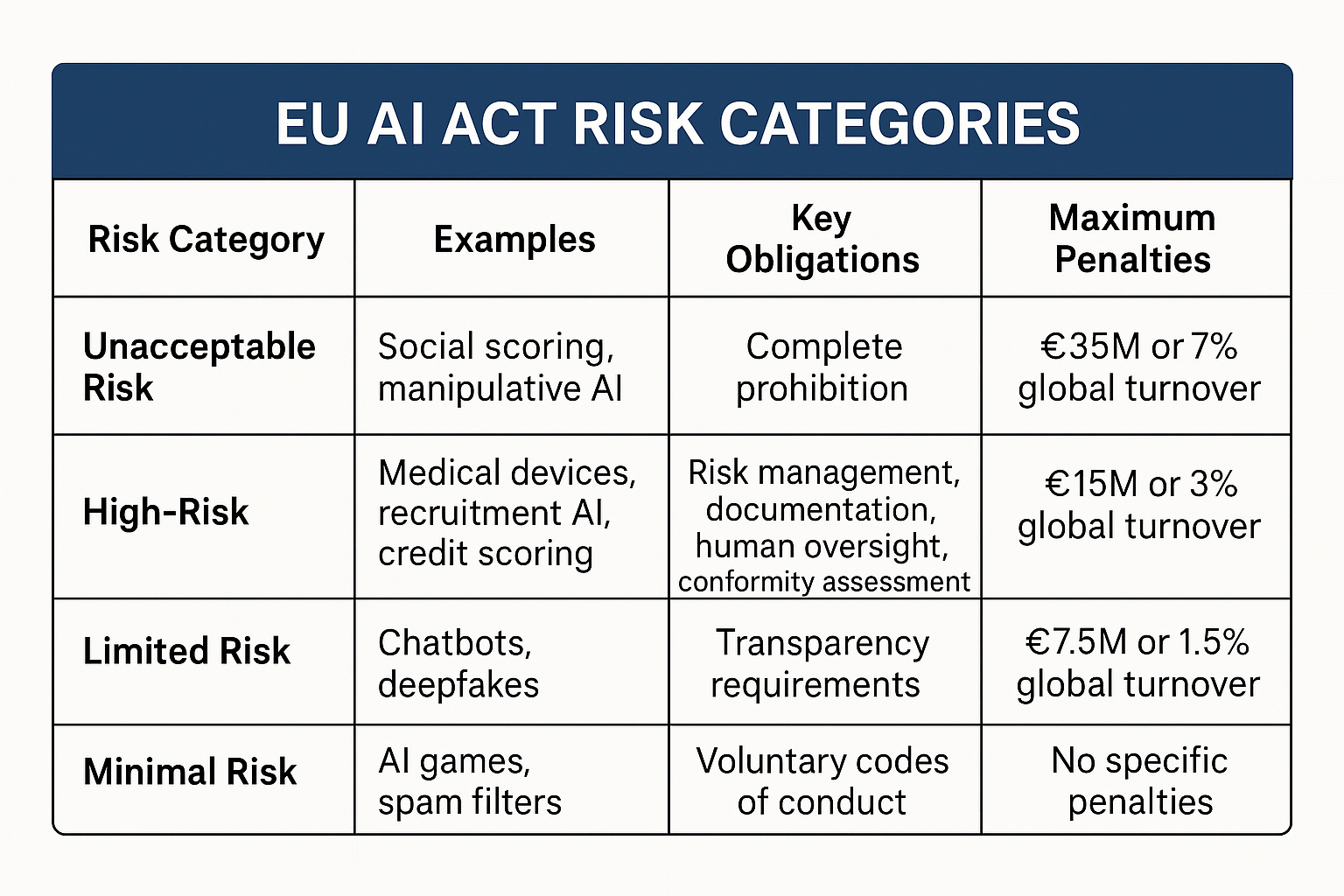

Even if you don't do business in Europe, you need to pay attention to the EU AI Act. It's the most comprehensive piece of AI legislation to date, and its influence is already shaping global standards. The Act's core is a risk-based approach, which categorizes AI systems into four tiers. For leaders, this means you must know where your AI systems fall.

- Unacceptable Risk: These systems are simply banned. Think social scoring or manipulative AI that exploits user vulnerabilities.

- High-Risk: This is where most of the regulatory burden lies. If your AI is used in critical areas like medical devices, recruitment, or credit scoring, you'll face strict obligations. These include rigorous risk management, data governance, transparency, and human oversight.

- Limited Risk: For systems like chatbots, the key is transparency. Users must know they are interacting with an AI.

- Minimal Risk: Most AI applications fall here and are largely unregulated.
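As a rough illustration of what "knowing where your AI systems fall" can look like in practice, the Python sketch below triages a small inventory into the four tiers. The keyword map, the `AISystem` record, and the `triage` function are hypothetical names invented for this example, and the keywords cover only the example use cases listed above. A keyword match is a first-pass screen to prioritize legal review, not a legal classification under the Act.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk tiers described above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical keyword map built only from the examples in this post.
# A real classification requires legal review of the Act's text and annexes.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "manipulative": RiskTier.UNACCEPTABLE,
    "medical device": RiskTier.HIGH,
    "recruitment": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
}

# Headline obligations per tier, paraphrased from the list above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not deploy"],
    RiskTier.HIGH: ["risk management", "data governance", "transparency", "human oversight"],
    RiskTier.LIMITED: ["disclose that users are interacting with an AI"],
    RiskTier.MINIMAL: [],
}

# Tiers are checked from most to least severe, so a description that matches
# several keywords is assigned its strictest tier.
SEVERITY_ORDER = [RiskTier.UNACCEPTABLE, RiskTier.HIGH, RiskTier.LIMITED]


@dataclass
class AISystem:
    name: str
    use_case: str  # free-text description of what the system is used for


def triage(system: AISystem) -> RiskTier:
    """Return a provisional tier; unmatched systems default to MINIMAL."""
    text = system.use_case.lower()
    for tier in SEVERITY_ORDER:
        keywords = [kw for kw, t in EXAMPLE_USE_CASES.items() if t is tier]
        if any(kw in text for kw in keywords):
            return tier
    return RiskTier.MINIMAL


if __name__ == "__main__":
    inventory = [
        AISystem("resume-screener", "Ranks candidates for recruitment"),
        AISystem("support-bot", "Customer service chatbot"),
        AISystem("spam-filter", "Filters spam email"),
    ]
    for system in inventory:
        tier = triage(system)
        print(system.name, tier.value, OBLIGATIONS[tier])
```

Checking the strictest tier first keeps a multi-purpose system from slipping into a lighter category. Anything the screen leaves at the minimal tier should still get a human review rather than being waved through.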

The EU AI Act also has extraterritorial reach: it can apply to providers and deployers outside the EU when their AI system's output is used in the EU. This isn't just a legal hurdle; it's a new global standard for responsible AI.

The financial stakes are substantial. For the most serious violations, such as using a prohibited AI practice, organizations face fines of up to €35 million or 7% of global annual turnover, whichever is higher. For context, this means a company with $10 billion in annual revenue could face penalties of up to $700 million. These aren't theoretical risks; the EU has already demonstrated its willingness to impose significant penalties, with GDPR fines exceeding €4.5 billion since 2018.
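The "whichever is higher" rule is easy to misread, so here is a minimal Python sketch of the fine ceilings. It assumes the three maximum-fine bands in Article 99 of the Act (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect or misleading information) and the rule that SMEs and start-ups face whichever amount is lower. The band labels and `max_fine_eur` are hypothetical names, amounts are whole euros to avoid floating-point rounding, and the result is a statutory ceiling, not a prediction of an actual fine.

```python
from typing import NamedTuple


class FineBand(NamedTuple):
    fixed_cap_eur: int     # fixed maximum amount in euros
    turnover_percent: int  # maximum share of worldwide annual turnover


# Maximum fine bands, assumed from Article 99 of the EU AI Act.
FINE_BANDS = {
    "prohibited_practice": FineBand(35_000_000, 7),
    "other_obligation": FineBand(15_000_000, 3),
    "misleading_information": FineBand(7_500_000, 1),
}


def max_fine_eur(band: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of a fine; regulators set the actual amount case by case."""
    b = FINE_BANDS[band]
    turnover_based = worldwide_turnover_eur * b.turnover_percent // 100
    if is_sme:
        # SMEs and start-ups: whichever amount is lower.
        return min(b.fixed_cap_eur, turnover_based)
    # Other organizations: whichever amount is higher.
    return max(b.fixed_cap_eur, turnover_based)


if __name__ == "__main__":
    # Mirrors the example above, in euros: 7% of €10 billion.
    print(max_fine_eur("prohibited_practice", 10_000_000_000))
    # For a €100 million company, the €35 million fixed cap is the higher figure.
    print(max_fine_eur("prohibited_practice", 100_000_000))
    # An SME with the same turnover faces the lower figure instead.
    print(max_fine_eur("prohibited_practice", 100_000_000, is_sme=True))
```

The three calls print 700000000, 35000000, and 7000000. The fixed cap matters most for smaller firms, while the turnover percentage dominates for large enterprises.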

2. ISO/IEC 42001: The "How-To" Guide for AI Governance

If the EU AI Act tells you what to do, ISO/IEC 42001 tells you how to do it. This new international standard provides a blueprint for creating an Artificial Intelligence Management System (AIMS). Think of it as the operational manual for responsible AI.

Adopting an AIMS is about building a structured, repeatable process for managing AI risks and opportunities. For leaders, pursuing ISO 42001 certification sends a powerful message to regulators, customers, and partners: you are serious about responsible AI. It demonstrates due diligence, builds trust, and provides a clear competitive advantage.

The business case is compelling. According to recent industry research, organizations with mature AI governance frameworks report 23% fewer AI-related incidents and 31% faster time-to-market for AI products. Companies that achieve ISO certifications typically see a 15-20% reduction in insurance premiums and experience 40% fewer regulatory audit findings. Moreover, 73% of enterprise buyers now consider AI governance certifications when selecting vendors, making compliance a direct revenue driver.

3. The Global Patchwork: One Size Does Not Fit All

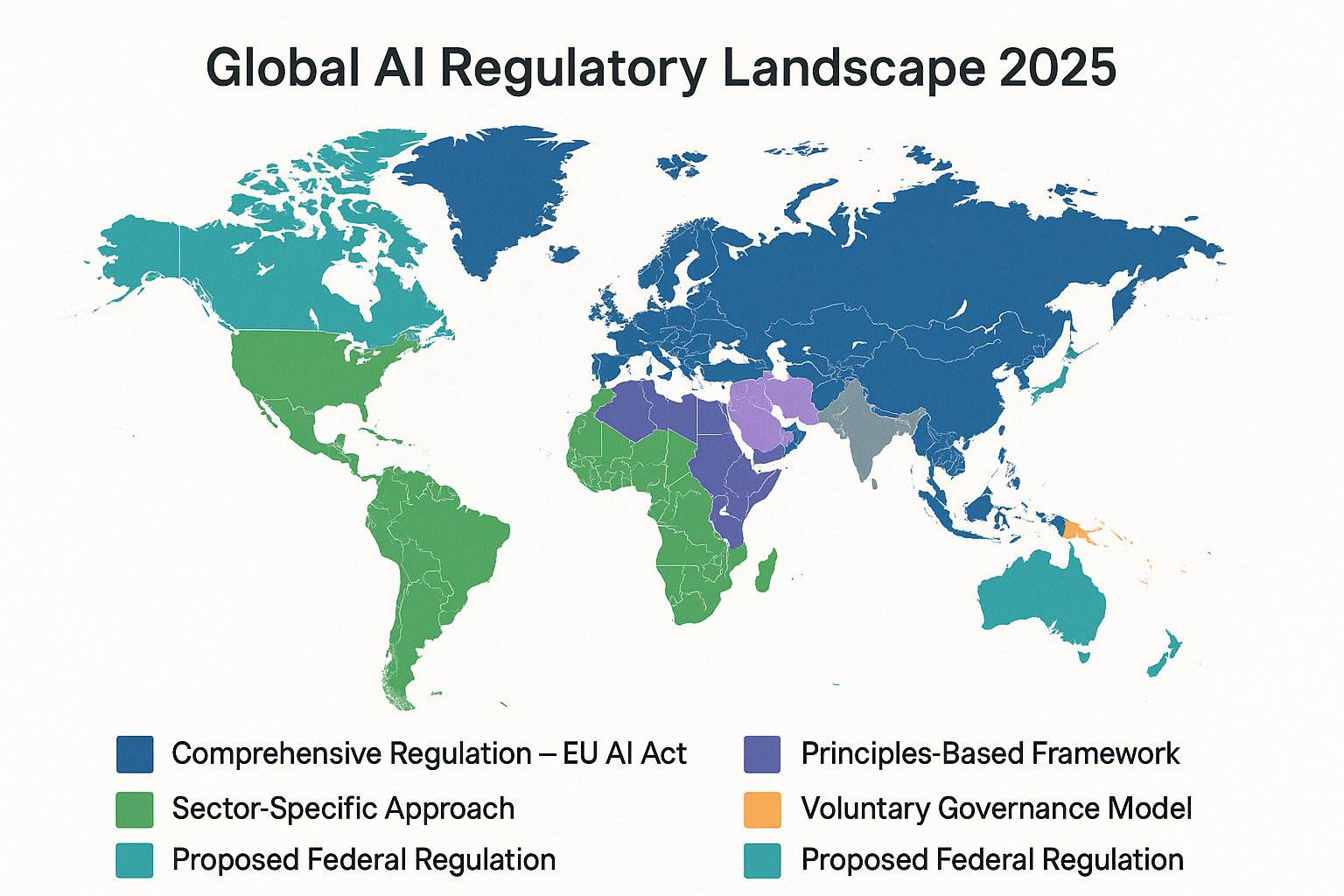

Finally, it's crucial to recognize that the world is not moving in lockstep. While the EU has set a high bar, other nations are carving their own paths:

- The United States is taking a more sector-specific, market-driven approach, empowering agencies like the FTC and FDA to regulate AI within their domains.

- The United Kingdom is promoting a flexible, "pro-innovation" framework that relies on existing regulators to create context-specific rules.

- Singapore and Canada are also advancing their own AI governance models, with an emphasis on practical, industry-focused guidance.

This fragmented landscape means that a one-size-fits-all compliance strategy is doomed to fail. Organizations operating globally must remain agile, monitoring developments in each jurisdiction where they operate and tailoring their approach accordingly.

The Cost of Inaction: Why Waiting Isn't an Option

The financial impact of AI compliance failures extends far beyond regulatory fines. A recent study by Deloitte found that organizations experiencing AI-related compliance issues face an average of $14.2 million in direct costs, including legal fees, remediation expenses, and business disruption. Additionally, companies suffer an average 12% drop in stock price following major AI governance failures, and 67% report lasting damage to customer trust that takes 18-24 months to rebuild.

Conversely, proactive AI governance delivers measurable returns. Organizations with mature AI compliance programs report 28% higher customer retention rates and command a 15% premium in B2B contract negotiations due to enhanced trust and reduced vendor risk.

Your Playbook: 5 Strategic Moves for AI Leaders

Given these stakes, navigating this complex environment requires decisive action. Here are five strategic moves every leader should make today:

- Centralize Your AI Governance. Don't let AI strategy be a siloed effort. Create a cross-functional team with representatives from legal, compliance, IT, and business units to oversee all aspects of AI governance.

- Adopt a Risk-Based Mindset. Not all AI is created equal. Use the risk-based approach pioneered by the EU AI Act to classify your AI systems and focus your compliance efforts where they matter most.

- Build Your AI Management System. Don't just create policies; build a system. Start implementing the principles of ISO/IEC 42001 to create a structured, repeatable, and defensible process for managing AI.

- Scan the Global Horizon. Your compliance team should operate as a global intelligence unit. Continuously monitor regulatory developments in every market you operate in, and be prepared to adapt your strategy accordingly.

- Invest in Your People. Your employees are your first line of defense. Invest in training to ensure everyone from your data scientists to your sales team understands your AI policies and their role in responsible AI.

The Future is Proactive

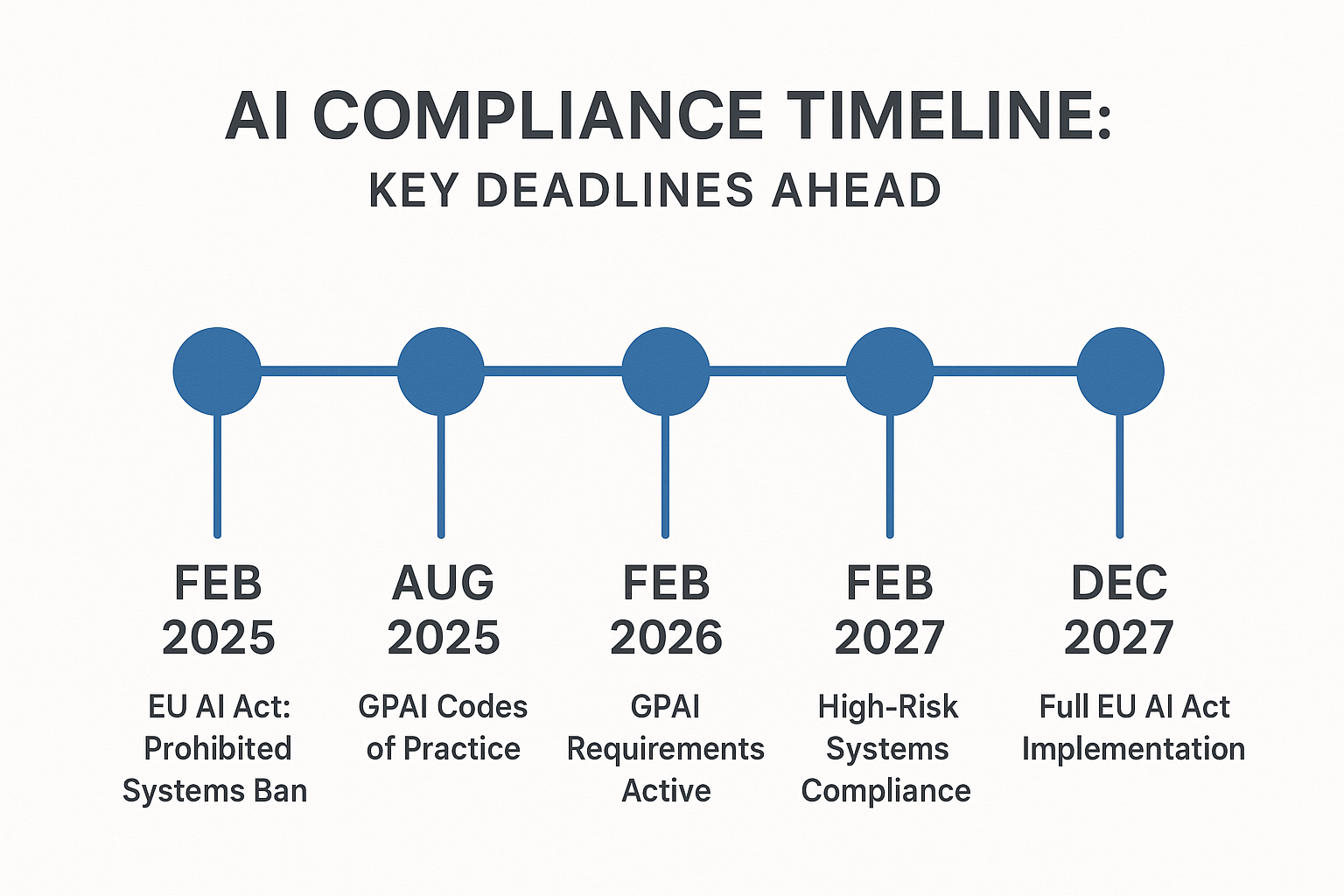

The era of reactive, checklist-based AI compliance is over. With the EU AI Act's first prohibitions already taking effect and penalties potentially reaching into the hundreds of millions, the stakes have never been higher. Yet the organizations that view this as merely a compliance burden are missing the bigger picture.

The future belongs to organizations that are proactive, strategic, and globally aware. By moving beyond the basics and embracing a comprehensive, forward-looking approach to AI governance, you can not only navigate the complex regulatory landscape but also build a foundation of trust that will unlock the full transformative potential of artificial intelligence.

The data is clear: organizations with mature AI governance frameworks outperform their competitors on key business metrics. They move faster, build stronger customer relationships, and command premium pricing in the market.

The time to act is now. Start with a comprehensive AI system audit, establish your risk classification framework, and begin building the governance infrastructure that will serve as your competitive advantage in the AI-driven economy. Your future market position depends on the decisions you make today.

This blog post is based on a comprehensive review of AI compliance frameworks and regulations. For organizations looking to implement these strategies, we recommend consulting with legal and compliance professionals familiar with your specific industry and jurisdictions.

References

[1] NIST. (2023). Artificial Intelligence Risk Management Framework (AI RMF 1.0). https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

[2] The Institute of Internal Auditors. (2024). The IIA's Artificial Intelligence Auditing Framework. https://www.theiia.org/globalassets/site/content/tools/professional/aiframework-sept-2024-update.pdf

[3] Allan, K. (2024, August 13). AI Governance: Mitigating AI Risk in Corporate Compliance Programs. GAN Integrity. https://www.ganintegrity.com/resources/blog/ai-governance-mitigating-ai-risk-in-corporate-compliance-programs/

[4] Insight Assurance. (2025, March 25). A Comprehensive Guide to AI Risk Management Frameworks. https://insightassurance.com/insights/blog/a-comprehensive-guide-to-ai-risk-management-frameworks/

[5] EU Artificial Intelligence Act. (2024). High-level summary of the AI Act. https://artificialintelligenceact.eu/high-level-summary/

[6] International Organization for Standardization. (2023). ISO/IEC 42001:2023 - AI management systems. https://www.iso.org/standard/42001